AUTOGENAI: Designing a Human-in-the-Loop Workflow for Federal RFP Automation

June 2025

Enable proposal teams to streamline RFP responses while maintaining full compliance control through human-in-the-loop design

01 / Project overview

I led the design of an AI-powered workflow that automates RFP analysis and response outline generation for US federal contracting. Over a 6-week period (1 discovery sprint + 2 technical sprints), I guided the design from initial research through to beta release. From the outset, the project was framed around human-in-the-loop design: ensuring that automation accelerated the process while preserving compliance accuracy, user trust, and human judgment.

02 / Challenge

Federal RFPs are dense, compliance-heavy documents. Bid writers typically spend 2–3 days manually reading, extracting requirements, mapping sections, and building response outlines and compliance matrices. This manual process creates bottlenecks, increases error risk, and slows down proposal timelines.

The challenge was not only to automate these steps, but to design an AI workflow that users would trust. In a high-stakes compliance environment, automation without human oversight is unacceptable. The solution required a collaborative model where AI accelerates extraction and structuring, and humans validate, refine, and ensure compliance.

03 / Opportunity

The project was driven by a clear business goal: supporting the company’s expansion into the US market, and in particular the high-value Federal bidding space. Federal RFPs are complex, compliance-heavy, and time-consuming—creating a strong need for tools that can accelerate the process without sacrificing quality.

By addressing this customer pain point, we had the opportunity to:

Deliver a workflow that cut days off proposal preparation.

Build trust by ensuring outputs were accurate, auditable, and compliant.

Differentiate ourselves from competitors by balancing speed, compliance, and quality.

Strengthen our sales narrative by aligning directly to a known customer requirement.

This project was therefore not just a design challenge, but a strategic opportunity to create competitive advantage and unlock growth in a new market segment.

04 / Process

With only a single discovery sprint to understand the problem space and pull together a design, I leveraged internal experts, existing research and insights, and AI prototyping tools to accelerate my progress.

1. Understanding Workflows & Risks

Conducted stakeholder interviews with internal bid professionals from our US team to uncover pain points, trust thresholds, and compliance concerns. With the help of a bid expert, I attempted to replicate the task of manually extracting requirements and building a response outline structure from sample RFPs.

Observed current workflows to identify which tasks could safely be automated and where human checkpoints were essential.

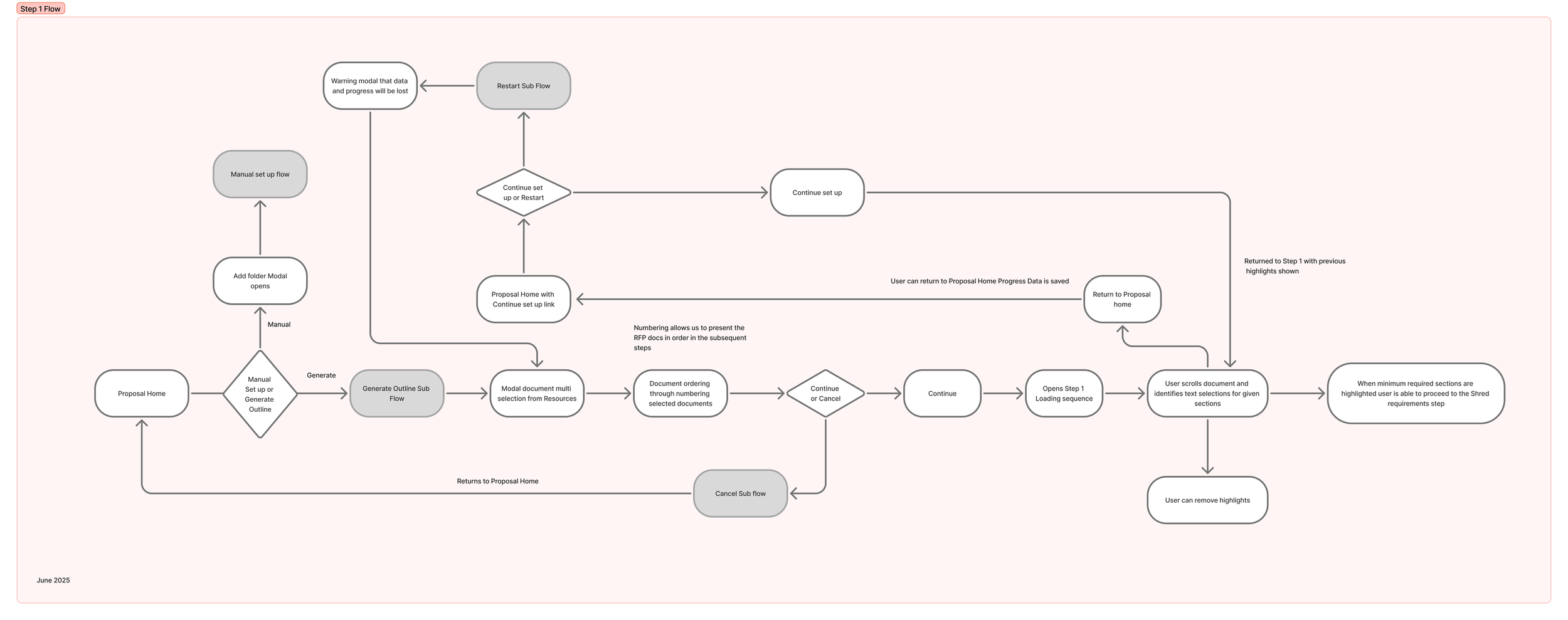

Collaborated with the technical lead and AI engineers to map the flow from document ingestion → parsing → requirement extraction → outline generation, overlaying intervention points for human review.

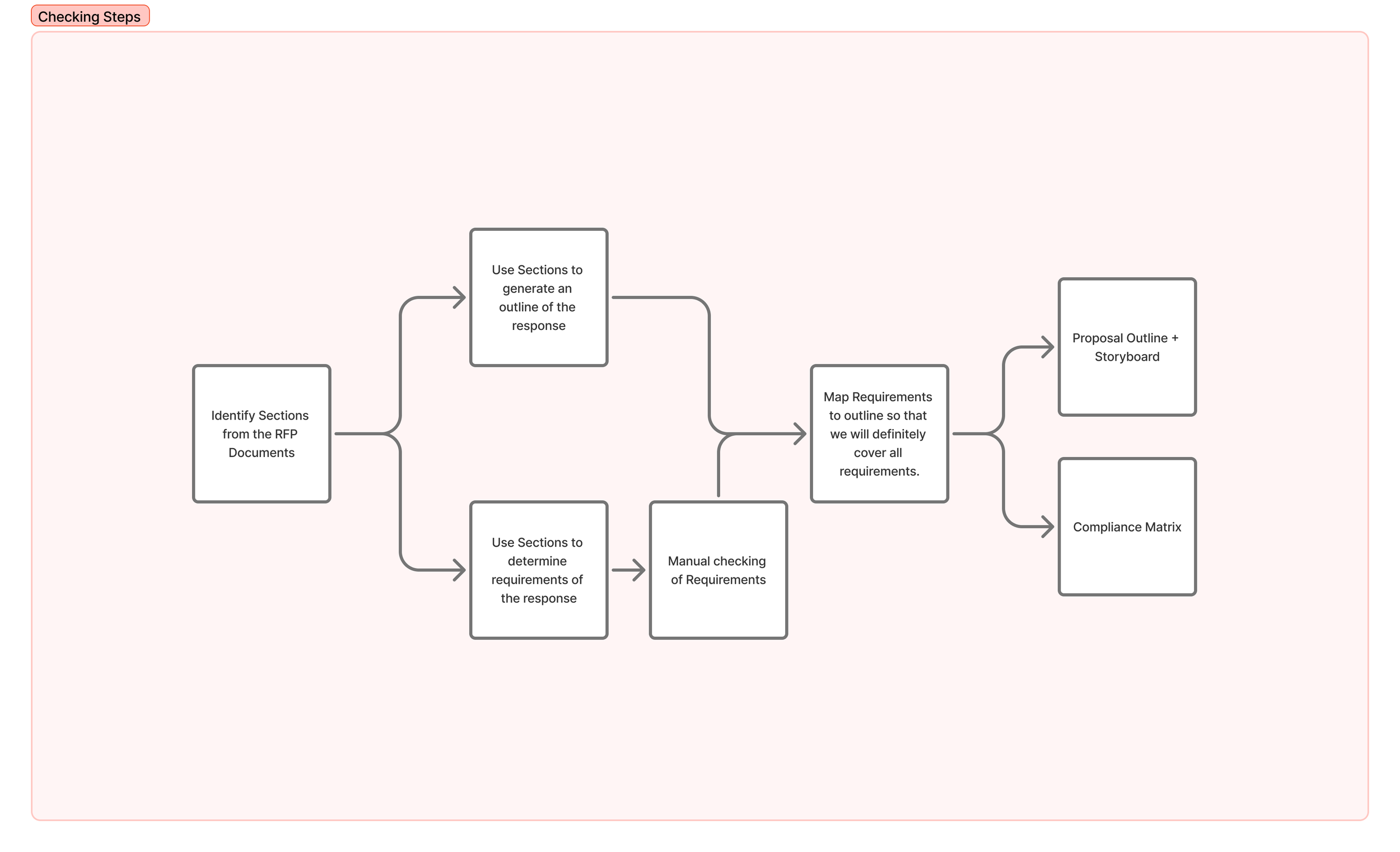

A simplified high-level flow showing the automated vs manual steps
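As a rough illustration of the flow described above, the stages and their human checkpoints could be modelled as follows. This is a minimal sketch, not the production implementation: the `Stage` class, the `run_pipeline` function, the toy stage logic, and the choice of which stages pause for review are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Tuple


@dataclass
class Stage:
    """One pipeline step (hypothetical structure for illustration)."""
    name: str
    run: Callable[[Any], Any]
    needs_review: bool = False  # True marks a human checkpoint


def run_pipeline(
    stages: List[Stage],
    payload: Any,
    review: Callable[[str, Any], Any],
) -> Tuple[Any, List[str]]:
    """Run stages in order, handing output to a reviewer at each checkpoint."""
    log = []
    for stage in stages:
        payload = stage.run(payload)
        if stage.needs_review:
            # The reviewer may return the payload unchanged or a corrected one.
            payload = review(stage.name, payload)
            log.append(f"{stage.name}: reviewed")
        else:
            log.append(f"{stage.name}: automated")
    return payload, log


# Toy stages standing in for the real ingestion, parsing and LLM steps.
stages = [
    Stage("ingest", lambda files: " ".join(files), needs_review=True),
    Stage("parse", lambda text: text.split(". ")),
    Stage("extract", lambda parts: [p for p in parts if "shall" in p], needs_review=True),
    Stage("outline", lambda reqs: [f"Section {i + 1}: {r}" for i, r in enumerate(reqs)], needs_review=True),
]


def approve(stage_name: str, payload: Any) -> Any:
    """Stand-in reviewer that accepts every output as-is."""
    return payload


outline, log = run_pipeline(
    stages, ["The vendor shall provide support. Pricing is fixed."], approve
)
```

Keeping the review step as a plain callback means the same flow can be exercised in tests with an auto-approving reviewer and wired to a real validation screen in the product.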

2. Validating Data Foundations

A key principle when designing with generative AI is to first explore the boundaries of what the model can and cannot do. Before committing to interface design, I made it a priority to understand the accuracy, consistency, and structure of the possible outputs. I began by assessing how well requirements could be identified and categorised.

Requirement Extraction Modelling: Worked with engineers to generate early sets of AI-extracted requirements.

Data Quality Review: Evaluated whether outputs aligned with the standard US federal bid structure, checking accuracy, completeness, and categorisation.

Findings:

When reviewing the test generation data, I saw two related problems. The LLM often misinterpreted requirement boundaries: sometimes it combined two distinct requirements into one, and at other times it split a single requirement into multiple fragments. This led me to consider adding editing functionality so users could ‘clean up’ requirements, if needed, before generating the response outline.
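To make the clean-up idea concrete, here is a minimal sketch of merge and split operations on a list of extracted requirements. The `Requirement` type, its fields, and both function signatures are hypothetical and chosen for illustration only; they do not describe the shipped data model.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Requirement:
    """Hypothetical shape of one extracted requirement."""
    text: str
    category: str


def merge(reqs: List[Requirement], i: int, j: int) -> List[Requirement]:
    """Join requirement j onto requirement i (i < j), keeping i's category."""
    if not 0 <= i < j < len(reqs):
        raise IndexError("expected 0 <= i < j < len(reqs)")
    merged = Requirement(f"{reqs[i].text} {reqs[j].text}", reqs[i].category)
    return reqs[:i] + [merged] + reqs[i + 1:j] + reqs[j + 1:]


def split(reqs: List[Requirement], i: int, at: int) -> List[Requirement]:
    """Split requirement i into two at character offset `at`."""
    text = reqs[i].text
    if not 0 < at < len(text):
        raise ValueError("split offset must fall inside the text")
    left = Requirement(text[:at].strip(), reqs[i].category)
    right = Requirement(text[at:].strip(), reqs[i].category)
    return reqs[:i] + [left, right] + reqs[i + 1:]


# One fragmented requirement (items 0 and 1) and one over-merged (item 2).
extracted = [
    Requirement("The offeror shall submit a plan", "Technical"),
    Requirement("within 30 days.", "Technical"),
    Requirement("Provide resumes. Provide references.", "Management"),
]
cleaned = merge(extracted, 0, 1)
cleaned = split(cleaned, 1, len("Provide resumes."))
```

Both functions return a new list rather than editing in place, which would make an undo history straightforward if the interface needed one.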

3. Journey Mapping & Lo-fi Wireframes

I mapped end-to-end user flows that alternated between automation phases and guided validation steps, and shared these internally for review. I then moved into a quick lo-fi wireframing exercise, run as a group session with the product trio, to visualise the flows and functionality across the views.

4. Rapid Prototyping & Validation

The screens I designed included complex interactive elements, so I leveraged newly available AI-powered prototyping tools to create high-fidelity, testable prototypes. This allowed me to validate interactions and gather feedback at speed without waiting for full development.

Figma Make for Speed: Leveraged Figma Make to rapidly create high-fidelity prototypes of critical workflows.

Team Testing: Used these prototypes to present and test ideas with cross-functional team members, quickly gathering feedback on flows, interactions, and usability.

Client Validation: Shared selected prototypes with Design Partnership clients (clients who have signed up to help us with discovery in return for early access to beta features), enabling us to validate assumptions with real bid writers and gather early feedback on usability, trust, and fit within existing workflows.

Rapid high-fidelity prototyping with Figma Make

05 / Solution

The result was an end-to-end human-in-the-loop workflow for RFP response preparation. The design intentionally alternated between AI taking control in automation-heavy phases (requirements extraction, outline structuring) and humans exercising agency in validation steps. Guided checkpoints signalled to users where their expertise was most needed: for example, validating requirements, correcting categorisation, and ensuring compliance fit.

The workflow comprised four main stages:

Step 1 – Document Ingestion

Users upload their RFP documentation, which is parsed by the system and displayed in the platform for review. This allows them to confirm that all files are present and in good condition. To improve downstream quality, users are also encouraged to quickly flag broad sections of their documents (page ranges) that are likely to contain requirements. Testing showed this step significantly improved extraction accuracy.
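One way the flagged page ranges could feed into extraction is sketched below. This is an assumption about how such hints might be applied, not a description of the actual system: the function names, the inclusive 1-based range format, and the choice to fall back to all pages when nothing is flagged are all illustrative.

```python
from typing import Dict, List, Tuple

PageRange = Tuple[int, int]  # (start, end), inclusive and 1-based (assumed)


def normalise_ranges(ranges: List[PageRange]) -> List[PageRange]:
    """Sort ranges and merge any that overlap or sit next to each other."""
    merged: List[PageRange] = []
    for start, end in sorted(ranges):
        if start > end:
            raise ValueError(f"invalid range: {start}-{end}")
        if merged and start <= merged[-1][1] + 1:
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


def pages_to_extract(pages: Dict[int, str], ranges: List[PageRange]) -> Dict[int, str]:
    """Return only the pages inside the user-flagged ranges."""
    flagged = normalise_ranges(ranges)
    if not flagged:
        # No hints given: fall back to the whole document.
        return dict(pages)
    return {
        number: text
        for number, text in pages.items()
        if any(start <= number <= end for start, end in flagged)
    }


# A 12-page document with two overlapping flags from the user.
document = {n: f"page {n} text" for n in range(1, 13)}
selected = pages_to_extract(document, [(5, 7), (3, 5)])
```

Merging the ranges first keeps the flagged set tidy when users mark overlapping sections, which is likely when flagging is done quickly and broadly.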

Step 2 – Requirement Extraction & Validation

The system identifies requirements and aligns them to a typical federal bid structure. Extracted requirements are displayed within the context of the RFP documents, with colour coding to support scannability. At this stage, users are equipped with tools to clean up the AI’s output—merging or splitting requirements, correcting categorisation, and ensuring none are missed—before moving forward.

Challenge: The original design called for colour-coded highlighting of requirements directly within RFP text, closely mirroring how writers naturally annotate documents. Due to technical limitations, the MVP shipped with chunk-based selection instead, which made precise highlighting more difficult. While this compromise allowed the feature to launch on time, highlighting remains a future design goal to better match user mental models and deliver a more intuitive validation experience.

Step 3 – Outline Structuring

From the validated requirements, the platform generates a draft response outline. Users can refine the structure through drag-and-drop reordering, renaming, or adding sections. Each section includes a storyboard that brings together the associated question, linked requirements, and supporting information—providing the right context for both the writer and the AI when drafting responses.

Step 4 – Compliance Assurance

Finally, users review an automatically generated compliance matrix, showing requirements mapped to response sections in a single, auditable view. This step reinforces transparency and ensures the proposal is fully traceable against the original RFP.
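The mapping behind a compliance matrix can be sketched in a few lines. The data shapes here (requirement IDs as dictionary keys, an outline that lists linked requirement IDs per section) and the function names are hypothetical, chosen only to show how traceability and coverage gaps could be derived.

```python
from typing import Dict, List


def build_compliance_matrix(
    requirements: Dict[str, str],
    outline: Dict[str, List[str]],
) -> List[dict]:
    """Return one row per requirement listing the sections that address it."""
    rows = []
    for req_id, text in requirements.items():
        sections = [name for name, linked in outline.items() if req_id in linked]
        rows.append(
            {
                "id": req_id,
                "requirement": text,
                "sections": sections,
                "covered": bool(sections),
            }
        )
    return rows


def uncovered(rows: List[dict]) -> List[str]:
    """List requirement IDs that no response section addresses yet."""
    return [row["id"] for row in rows if not row["covered"]]


# Illustrative data only.
requirements = {
    "R1": "Describe the technical approach.",
    "R2": "Identify key personnel.",
    "R3": "Provide three past performance references.",
}
outline = {
    "Technical Approach": ["R1", "R2"],
    "Management Plan": ["R2"],
}
matrix = build_compliance_matrix(requirements, outline)
gaps = uncovered(matrix)
```

Surfacing the uncovered list alongside the matrix gives reviewers a direct pointer to requirements that still need a home in the outline.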

06 / Impact

Beta Release: Launched to early users, who responded positively to the ability to move faster without losing control.

Client Validation: Design Partnership clients played a key role in testing early prototypes, providing feedback that shaped critical HITL features such as requirement clean-up (merge/split/delete) and multi-category assignment. This early involvement increased confidence in adoption.

Adoption Drivers: Feedback confirmed that users valued how the workflow clearly signalled when their expertise was needed. This reinforced their sense of control while reducing the burden of unnecessary oversight.

Trust in Collaboration: Users reported greater confidence in AI outputs when they understood the alternating flow of AI handling automation and humans providing quality assurance.

Evolving Expectations of AI: Testing also revealed that users expect a great deal from AI, often assuming it should deliver faster and higher-quality outputs than current capabilities allow. While the workflow cut days off the process, users still pushed for greater automation. At the same time, they stressed the importance of retaining oversight and trust. This tension between the promise of automation and the need for human control remains a central design challenge for future iterations.

07 / Key Takeaway

This project demonstrates how human-in-the-loop design transforms automation from a risk into a partnership. By intentionally designing intervention points, transparency mechanisms, and override controls, we created an AI system that bid writers could trust in a high-stakes compliance environment.